Digital realities are growing significantly across many industries and are taking user experiences to the next level. This blog covers the pros, cons, and key differences between augmented, virtual, and mixed reality, along with an overview of their respective architectures and developer tools.

By the end of this blog, we will have a clear understanding of the strengths and weaknesses of digital realities. The practice of Digital Realities rests on three pillars: Virtual Reality (VR), Augmented Reality (AR), and Mixed Reality (MR). Of the three, MR is arguably the most impactful, and it is typically built with tools like C#, Unity 3D, Blender, HoloLens, and Visual Studio.

What is Digital Reality?

Is VR + AR + MR = Digital Realities?

There has been plenty of buzz around virtual, augmented, and mixed reality. Core industries such as Education, Engineering, Healthcare, and Entertainment are exploring these emerging technologies to their limits.

Let us first look at VR's past. The earliest precursor of Virtual Reality is usually traced to 1838, when Charles Wheatstone described the stereoscope, which showed that the brain fuses two slightly different 2D images into a single 3D image. The Link Trainer flight simulator followed as the second VR-like experience, and the third was the Sensorama, which made little impact in the market because of its cost and size.

Next came the modern era of VR in the 1980s and 1990s, with bulky headsets and low-quality graphics. Today, the devices and applications we use are smaller and deliver better sound and graphics.

The very aim of Virtual Reality is to replace the user's reality with a fabricated one. But this proposition is not without its complications. A fully immersive VR experience blocks out the real world entirely: users cannot see where they are going, navigation can be clunky, and the experience may cause motion sickness.

To take VR to the next level, one must smooth out the experience and deal with these challenges: lack of visibility, clunky navigation, and motion sickness. The ultimate aim of VR is to immerse the user completely in a world of the designer's making.

Whereas VR excludes the real world completely, Augmented Reality (AR) uses the real world as the background for the digital experience. It typically uses a shape or symbol as a point of reference to trigger the user experience. An early AR system was built in the early 1990s at a US Air Force laboratory, where virtual fixtures were used to improve human performance. Today, AR is much more approachable and portable, and most AR experiences run on mobile devices. Marketing brochures that come alive when the user points the camera at them, or a game of cards where the characters pop out, are all instances of AR in action. Today, any device with a camera is a potential AR device. You could pair the AR device with a smartphone app to deliver a complete, 360-degree experience.

With this in mind, industry and academia alike have started research projects on Augmented Reality. AR faces three main challenges. First, the user is interacting with what is essentially an overlay of data. Second, capturing input from users is still a barrier. Third, the consumer market still needs to be educated about AR and its potential. Whereas VR has made its mark and most people understand the concept, AR is very commonly misunderstood. Because it overlays data on the real world, AR can give users a more holistic experience than VR.

The new kid on the ‘digital reality’ block is Mixed Reality (MR), which merges the real and the virtual (digital) worlds to produce a new environment and visualization where physical and digital objects coexist and interact in real time. Where VR replaces the entire world of the user, and AR overlays digital content on the real world, MR takes it a step further.

In MR, actual physical objects take part in the experience. This requires spatial mapping, which depends on specialized hardware that isn’t found in augmented or virtual reality devices by default. Spatial mapping is typically done using environmental cameras together with depth sensors that rely on infrared light. Spatial mapping is what makes or breaks the experience of Mixed Reality, so the community of architects, designers, and developers should make the most of it in Mixed Reality applications, so that the experience is convincing and realistic.

VR vs. AR vs. MR

VR, AR, and MR share many similarities, such as digital 3D assets and the use of hardware to project the experience, but they also have significant differences.

| Virtual Reality | Augmented Reality | Mixed Reality |
| --- | --- | --- |
| Fully digital | Data overlay | Interaction |
| Tethering / powerful PC | Smartphone / tablet | Self-contained device |
| Mapping needs to be done with external cameras | Surface tracking | Accuracy of 3D model; Apple ARKit, Google ARCore |
| Restricted movement | Free movement | Free movement |
| Proprietary hardware | Case by case | Proven collaboration |
| Unity 3D, Unreal Engine, WebVR | ARKit, ARCore | HoloLens uses Unity and Visual Studio |

Development and Tooling

So far, we have covered an overview of the digital realities: VR, AR, and MR. Let’s now discuss the more technical aspects of building a digital reality app.

Many VR development tools and platforms are on the market; they include the Unity 3D engine and hardware platforms such as Oculus Rift, Google Daydream, Samsung Gear VR, and HTC Vive. Unity is a commonly used tool, especially for hobby and small projects. It is readily available, with a free tier for exploring the editor at zero cost. Its benefits include great tutorials designed around 2D and 3D gaming, an active community, and an asset store. Unity 3D has supported three scripting languages: C#, UnityScript (a JavaScript-like language), and Boo (which is similar to Python). C# is the one in use today, as the other two have been deprecated. Another notable tool in the VR ecosystem is Unreal Engine, which suits medium to large enterprises; it uses the programming language C++, comes with a full-scale editor, and even has a marketplace where you can acquire music, sound effects, and animations. Last but not least, there is WebVR, which runs in modern web browsers that support the API; it is based on JavaScript and is often used through the A-Frame framework. It can reach 90 frames per second in a supporting browser.
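To put the 90 frames per second figure in perspective, it helps to convert it into a per-frame time budget. The snippet below is a minimal sketch of that arithmetic; the function names `frame_budget_ms` and `meets_budget` are hypothetical helpers written for this post and are not part of any VR SDK.

```python
def frame_budget_ms(target_fps: float) -> float:
    """Return the time available to render one frame, in milliseconds."""
    if target_fps <= 0:
        raise ValueError("target_fps must be positive")
    return 1000.0 / target_fps


def meets_budget(render_time_ms: float, target_fps: float = 90.0) -> bool:
    """Check whether a measured render time fits inside the frame budget."""
    return render_time_ms <= frame_budget_ms(target_fps)


if __name__ == "__main__":
    # At 90 fps, each frame has roughly 11.11 ms to be simulated and drawn.
    print(f"{frame_budget_ms(90):.2f} ms per frame at 90 fps")
    print("10 ms frame fits:", meets_budget(10.0))
    print("12 ms frame fits:", meets_budget(12.0))
```

In other words, anything that takes longer than about 11 ms per frame will drop below the target rate, which is one reason a VR scene usually needs leaner assets than a comparable desktop scene.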

Because AR tools use the real world, they must adapt to their surroundings, and AR therefore requires minimal 3D modelling. The traditional augmented experience has three steps: first, the user downloads and opens the app; second, the camera finds the trigger point; and third, the action plays. Apple ARKit and Google ARCore are two exciting kits for building great AR projects. ARKit relies on VIO (Visual Inertial Odometry), which combines camera images with motion-sensor data so that the device can be tracked using its built-in camera, and it uses the TrueDepth camera for face tracking on devices that have one. ARCore uses the SLAM (Simultaneous Localization and Mapping) approach.
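The three-step flow described above (app, trigger point, action) can be sketched in a few lines. This is only an illustration under stated assumptions: `ArSession`, `on_frame`, and the marker name `brochure_logo` are hypothetical, and the marker detection itself, which ARKit or ARCore would perform on the camera feed, is stubbed out as a plain string.

```python
from dataclasses import dataclass
from typing import Callable, Dict, Optional


@dataclass
class ArSession:
    """Step 1: the downloaded app holds a mapping from trigger to action."""

    actions: Dict[str, Callable[[], str]]

    def on_frame(self, detected_marker: Optional[str]) -> Optional[str]:
        """Run once per camera frame.

        `detected_marker` stands in for the result of image recognition;
        None means no trigger point was seen in this frame.
        """
        # Step 2: was a known trigger point found in the frame?
        if detected_marker is None:
            return None
        action = self.actions.get(detected_marker)
        # Step 3: play the action tied to that trigger, if there is one.
        return action() if action is not None else None


if __name__ == "__main__":
    session = ArSession(actions={"brochure_logo": lambda: "show 3D product model"})
    for marker in (None, "brochure_logo", "unknown_shape"):
        print(marker, "->", session.on_frame(marker))
```

Keeping the trigger-to-action mapping as data makes it easy to add new brochures or cards without touching the per-frame logic.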

MR tools offer two main ways to develop experiences: HoloLens apps and Immersive apps. Both have the same development cycle, but slightly different versions of the tooling. Whereas VR relies on the designer and developer to create the environment, and AR uses the camera and motion detection to understand the user's world, MR is slightly more complex and sophisticated. HoloLens builds a 3D model of the real world using environmental cameras. In this way, the real world is mapped into a detailed 3D mesh made up of triangles. Once the model is ready, we can place the digital assets, or holograms, in it. Both HoloLens and Immersive apps are built using Unity 3D. So, Unity 3D is used for most of the MR app creation journey, with Visual Studio thrown into the mix of the development cycle.

We have now briefly reviewed the tooling choices for the digital realities. As 3D is a large component of VR, AR, and MR, it’s clear that we need a 3D tool to create the experience. Overall, we are left with two tooling choices for creating digital reality experiences: Unreal Engine and Unity 3D. If we need to create an MR or HoloLens app, the best option is Unity.
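To make the idea of placing a hologram on the mapped model more concrete, here is a minimal sketch that casts a ray (for example, the user's gaze) against a triangle mesh and returns the nearest hit point. It uses the well-known Möller-Trumbore ray-triangle test in plain Python. It is not the HoloLens or Unity API; the names `ray_triangle_distance` and `place_hologram` are assumptions made for illustration, and a real app would rely on the engine's own raycasting.

```python
from typing import List, Optional, Tuple

Vec3 = Tuple[float, float, float]
Triangle = Tuple[Vec3, Vec3, Vec3]


def sub(a: Vec3, b: Vec3) -> Vec3:
    return (a[0] - b[0], a[1] - b[1], a[2] - b[2])


def dot(a: Vec3, b: Vec3) -> float:
    return a[0] * b[0] + a[1] * b[1] + a[2] * b[2]


def cross(a: Vec3, b: Vec3) -> Vec3:
    return (
        a[1] * b[2] - a[2] * b[1],
        a[2] * b[0] - a[0] * b[2],
        a[0] * b[1] - a[1] * b[0],
    )


def ray_triangle_distance(
    origin: Vec3, direction: Vec3, tri: Triangle, eps: float = 1e-9
) -> Optional[float]:
    """Moller-Trumbore test: distance along the ray to the triangle, or None."""
    v0, v1, v2 = tri
    edge1, edge2 = sub(v1, v0), sub(v2, v0)
    p = cross(direction, edge2)
    det = dot(edge1, p)
    if abs(det) < eps:  # ray is parallel to the triangle's plane
        return None
    inv_det = 1.0 / det
    t_vec = sub(origin, v0)
    u = dot(t_vec, p) * inv_det
    if u < 0.0 or u > 1.0:  # hit point lies outside the triangle
        return None
    q = cross(t_vec, edge1)
    v = dot(direction, q) * inv_det
    if v < 0.0 or u + v > 1.0:
        return None
    t = dot(edge2, q) * inv_det
    return t if t > eps else None  # ignore hits behind the ray origin


def place_hologram(
    origin: Vec3, direction: Vec3, mesh: List[Triangle]
) -> Optional[Vec3]:
    """Return the nearest point where the ray meets the mesh, if any."""
    nearest: Optional[float] = None
    for tri in mesh:
        t = ray_triangle_distance(origin, direction, tri)
        if t is not None and (nearest is None or t < nearest):
            nearest = t
    if nearest is None:
        return None
    return (
        origin[0] + nearest * direction[0],
        origin[1] + nearest * direction[1],
        origin[2] + nearest * direction[2],
    )


if __name__ == "__main__":
    # A single floor triangle at height y = 0, viewed from one metre above.
    floor: Triangle = ((-1.0, 0.0, -1.0), (1.0, 0.0, -1.0), (0.0, 0.0, 1.0))
    print(place_hologram((0.0, 1.0, 0.0), (0.0, -1.0, 0.0), [floor]))
```

Looping over every triangle is fine for a sketch, but a real spatial mesh can contain many thousands of triangles, so engines use spatial acceleration structures for this lookup.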

| Unity 3D | Unreal Engine |
| --- | --- |
| Works with VR, AR, and MR | Works with VR and AR |
| C# | C++ |
| Great asset store | Average marketplace |
| Simple and fast to get going | Complex and powerful |

Essential 3D modeling tools include Maya, which is high quality but expensive, and Blender, an open source tool that covers the entire development flow: modeling, rigging, animation, simulation, rendering, compositing, and motion tracking, along with video editing and game creation. Sculptris is another mature product, aimed at first-time 3D modeling users. Another tool is SketchUp, which allows 2D and 3D modeling and even offers print and export options.

Industrial Implementations

Let’s explore some of the industry implementations of the digital experiences discussed above, and the possibilities they hold for the future.

| Education | Manufacturing & Engineering | Healthcare |
| --- | --- | --- |
| Inclusive and collaborative teaching rather than one-way teaching | Boeing uses AR to teach engineers how to assemble wings | New arsenal for medical professionals |
| Personalized learning | Equipment maintenance | Improved hospital experience |
| Enhanced learning experience | Car manufacturers use VR; Ford uses AR and MR in its factory production process | Controlled cost of care |
| Full-size lab tailored to the student and syllabus | Fresh slant on predictive analytics | Pain relief, physical therapy, fears and phobias |
| Each student can have their own environment | Avoid mistakes via a headset such as Oculus Rift | Graded-exposure therapy |
| VR: Google Tilt Brush; AR: brings drawings to life; MR: iPad/iPhone | Demand forecasting from POS | More remote access, improved socioeconomic life, diagnosis and treatment |

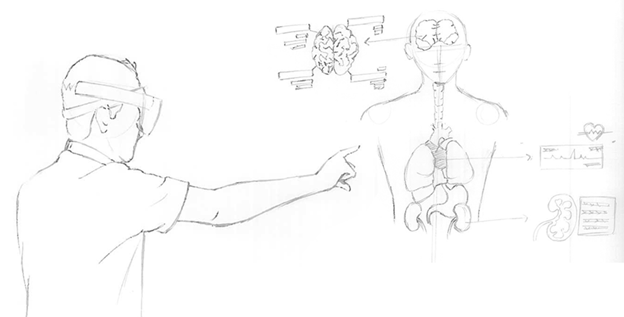

Microsoft HoloLens applications in the healthcare and pharmaceutical industries hold great potential for visualizing patient health data.

To conclude, Digital Realities are just as promising as other emerging areas such as Big Data, Cloud, AI, and Blockchain. We must focus on the overlap of Digital Realities with these technologies, and on how that overlap can bring a truly unique and personalized experience to customers.

Happy Reading, Happy Sharing!

This content is the personal view of Kumar Chinnakali, Lead Architect @ Knowledge Lens, and does not represent the company, Knowledge Lens in any case.